Preface

Designing and implementing a datacenter remains one of the most challenging and fulfilling projects of my career. It has been three years since we installed the current iteration, which is our third location: we started at EvoSwitch in Manassas, VA, moved to AiNET in Beltsville, MD, and finally settled at CoreSite in Reston, VA.

Initially in Manassas

Our first installation was a single rack in a colocation facility. We constantly watched the clock to avoid rush-hour traffic on I-495. The setup was simple:

- A single ASA firewall

- An IBM PureFlex system (managing Power and Intel nodes)

- A few IBM Power and Windows servers

- Cisco switches handling VLANs and networking

IBM eventually discontinued the PureFlex system, but at the time, it provided a “single pane of glass” for management.

Next: AiNET

Growth and management issues in Manassas drove us to a location closer to our main office. Because the Flex system had been discontinued, we moved to standalone servers and replaced the single ASA with a pair of redundant pfSense firewalls.

We preferred pfSense over the ASA for its flexibility, specifically its ability to run as a VM on VMware. We virtualized Intel workloads on VMware and ran Power workloads as IBM i LPARs. Although we lost our single pane of glass (we now used vCenter for VMware and the HMC for Power), we continued to expand.

My colleague Bill Harrison handled networking while I managed the IBM Power configurations, and we collaborated on VMware. We hosted mail servers, websites, and private servers, separated by VLANs and virtual firewalls. Using DoD address space internally caused some NAT-T (NAT traversal) issues, but we learned a lot. When Bill moved on, I took over the infrastructure, having learned enough of the Cisco CLI to manage the growth.

On to CoreSite

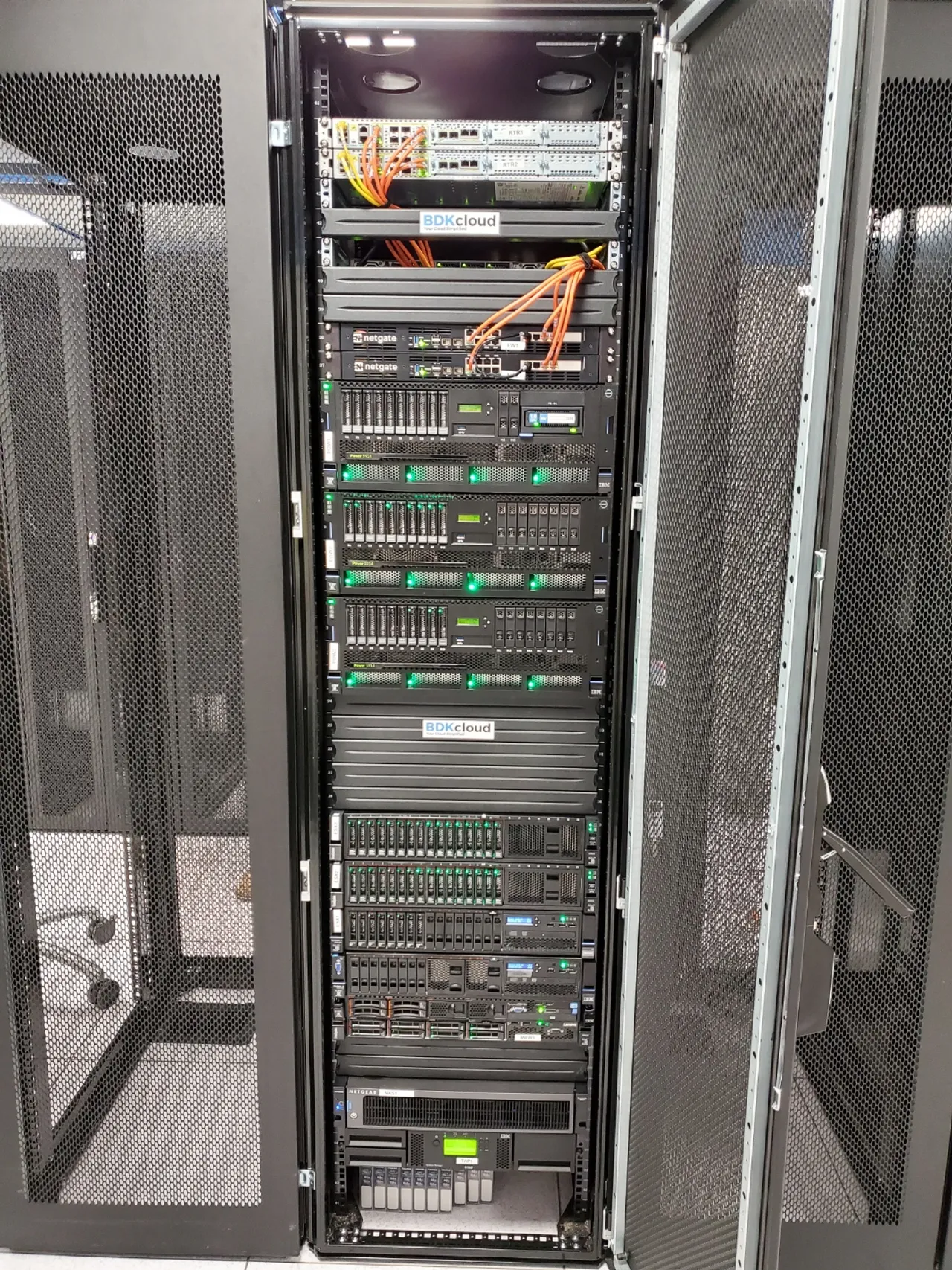

Before moving to CoreSite, we hired Tim, a Cisco Certified Instructor. Together, we planned a complete migration from AiNET. We spent months designing a redundant, high-speed infrastructure that eliminated the DoD addresses and the virtual firewalls. We chose Netgate hardware appliances to run pfSense, and we made sure every server had multiple connections to each switch.

The Build

- Firewalls: A High Availability (HA) pair of Netgate firewalls running pfSense.

- Switches: A pair of Cisco Nexus switches with LAGGs to the firewalls for redundancy.

- VLANs: Ports in trunk mode so the hypervisors can carry multiple VLANs.

- vPC (virtual Port Channel): Configured across both Nexus switches so that a LAGG spanning the two switches behaves as a single logical link.
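The goal of this build is that losing any one firewall, switch, or cable should not take a server offline. The Python below is a minimal sketch of how one might check a design like this for single points of failure: it models the rack as a graph, fails each firewall, switch, and cable in turn, and runs a breadth-first search to confirm every server can still reach a firewall. The device names and the two example servers are hypothetical, since the actual rack inventory isn't listed here, and the model ignores vPC and LACP protocol behavior, treating each cable as a plain link.

```python
from collections import deque

# Hypothetical device names; the real rack inventory is not given in the post.
FIREWALLS = {"fw-a", "fw-b"}        # HA pair of Netgate firewalls running pfSense
SWITCHES = {"nexus-1", "nexus-2"}   # Nexus pair joined by a vPC peer link
SERVERS = {"power-1", "esxi-1"}     # example IBM Power and VMware hosts

# Undirected links; parallel entries model multiple cables between two devices.
LINKS = [
    ("fw-a", "nexus-1"), ("fw-a", "nexus-2"),
    ("fw-b", "nexus-1"), ("fw-b", "nexus-2"),
    ("nexus-1", "nexus-2"),                      # vPC peer link
    ("power-1", "nexus-1"), ("power-1", "nexus-1"),
    ("power-1", "nexus-2"), ("power-1", "nexus-2"),
    ("esxi-1", "nexus-1"), ("esxi-1", "nexus-1"),
    ("esxi-1", "nexus-2"), ("esxi-1", "nexus-2"),
]


def reaches_firewall(links, start, failed_device=None, failed_link=None):
    """BFS from a server to any surviving firewall, skipping one failed part."""
    graph = {}
    for i, (a, b) in enumerate(links):
        if i == failed_link or failed_device in (a, b):
            continue
        graph.setdefault(a, []).append(b)
        graph.setdefault(b, []).append(a)
    seen, queue = {start}, deque([start])
    while queue:
        node = queue.popleft()
        if node in FIREWALLS:
            return True
        for nxt in graph.get(node, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(links):
    """List every device or cable whose loss isolates a server from all firewalls."""
    failures = []
    for device in sorted(FIREWALLS | SWITCHES):
        if not all(reaches_firewall(links, s, failed_device=device) for s in SERVERS):
            failures.append(device)
    for i, link in enumerate(links):
        if not all(reaches_firewall(links, s, failed_link=i) for s in SERVERS):
            failures.append(link)
    return failures


print(single_points_of_failure(LINKS))  # prints []
```

With every server cabled twice to each switch, the check returns an empty list. If a server were cabled to only one switch, that switch would show up in the result.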

We tested the new setup with new servers, confirming throughput and power redundancy. Then, we scheduled a 12-hour overnight window for the move.

Tim, our coworker Jesse, and I drove to Beltsville, unracked the equipment, and transported it to Reston, where we immediately began racking it.

- IBM Power servers: Connected to the Nexus switches via LACP LAGGs. (IBM documentation only confirmed this setup for Catalyst switches, but we made it work.)

- Intel servers: Network load balancing (NIC teaming) configured within VMware, removing the need for LAGGs.

We placed the hypervisors and the customer LPARs on separate VLANs. Everything was tested and confirmed running before our deadline.

Looking Back

I am very pleased with our final configuration. Maintenance is straightforward and easy to teach. Documentation allows us to add new networks and customers with minimal effort.

- Performance: IBM Power servers with 4-port LAGGs show under 1 ms of latency to public services.

- Storage: Our VMware stack evolved into a vSAN solution with NVMe SSDs for speed and redundancy.

- Uptime: We can perform maintenance and apply firmware updates during the day without downtime.

This project stands as a highlight of my IT career.